Investigating Facebook’s interventions against accounts that repeatedly share misinformation

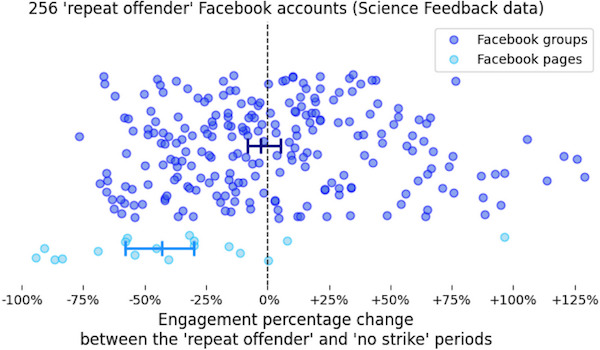

We investigate the implementation and consequences of Facebook’s policy against accounts that repeatedly share misinformation by combining fact-checking data with engagement metrics. Using one dataset from Science Feedback and one from Social Science One (the Condor dataset), we identified a set of public accounts (groups and pages) that repeatedly shared misinformation during the 2019–2020 period. We find that engagement per post decreased significantly for Facebook pages after they shared two or more ‘false news’ items. The median decrease is 43% for pages identified with the Science Feedback dataset and 62% for pages identified with the Condor dataset.
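To make the "median decrease" figure concrete, the following minimal sketch shows one way such a statistic could be computed: a percentage change in mean engagement per post is calculated for each page, and the median is then taken across pages. The function names, the before/after split, and the sample numbers are hypothetical illustrations, not the study's actual code or data.

```python
from statistics import mean, median


def percent_change(before, after):
    """Percentage change in mean engagement per post between two periods."""
    base = mean(before)
    return (mean(after) - base) / base * 100


def median_percent_change(pages):
    """Median of the per-page percentage changes."""
    return median(percent_change(before, after) for before, after in pages)


# Hypothetical engagement counts per post (assumed values, not study data).
# Each tuple holds posts before and after a page's second 'false news' item.
pages = [
    ([100, 120, 80], [50, 60, 40]),  # mean 100 -> 50
    ([200, 200], [150, 150]),        # mean 200 -> 150
    ([10, 30], [8, 8]),              # mean 20 -> 8
]

print(median_percent_change(pages))
```

Using the median rather than the mean keeps a handful of very large or very small pages from dominating the summary figure.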

We show that this ‘repeat offenders’ penalty did not apply to Facebook groups. Instead, we find that groups were affected differently: their average engagement per post dropped suddenly around June 9, 2020. While this drop cut the groups’ engagement per post roughly in half, it was offset by these accounts doubling their number of posts between early 2019 and summer 2020. The net result is that total engagement on posts from ‘repeat offender’ accounts (both pages and groups) returned to its early 2019 levels. Overall, Facebook’s policy thus appears to contain the growth of misinformation shared by ‘repeat offenders’ rather than reduce it.
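The offsetting effect for groups can be written out as simple arithmetic. This is an idealized illustration that assumes exact halving and doubling, whereas the figures reported above are approximate. The symbols $N$ (number of posts) and $e$ (average engagement per post) are introduced here for illustration only.

```latex
E_{\text{total}} = N \cdot e
\qquad\Longrightarrow\qquad
E'_{\text{total}} = (2N) \cdot \frac{e}{2} = N \cdot e = E_{\text{total}}
```

Under these assumptions, doubling the number of posts exactly cancels a halving of engagement per post, leaving total engagement unchanged.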